Objectives

- Parser logic to detect hardcoded values in views

- Logic to replace each hardcoded value with a dynamically generated i18n key that fits the context, then add each key to the locale files automatically

- Loop through all locale keys and generate a translation for each one using AI, passing along the context of where the key is used

- Handle edge cases where the parser or the AI fails

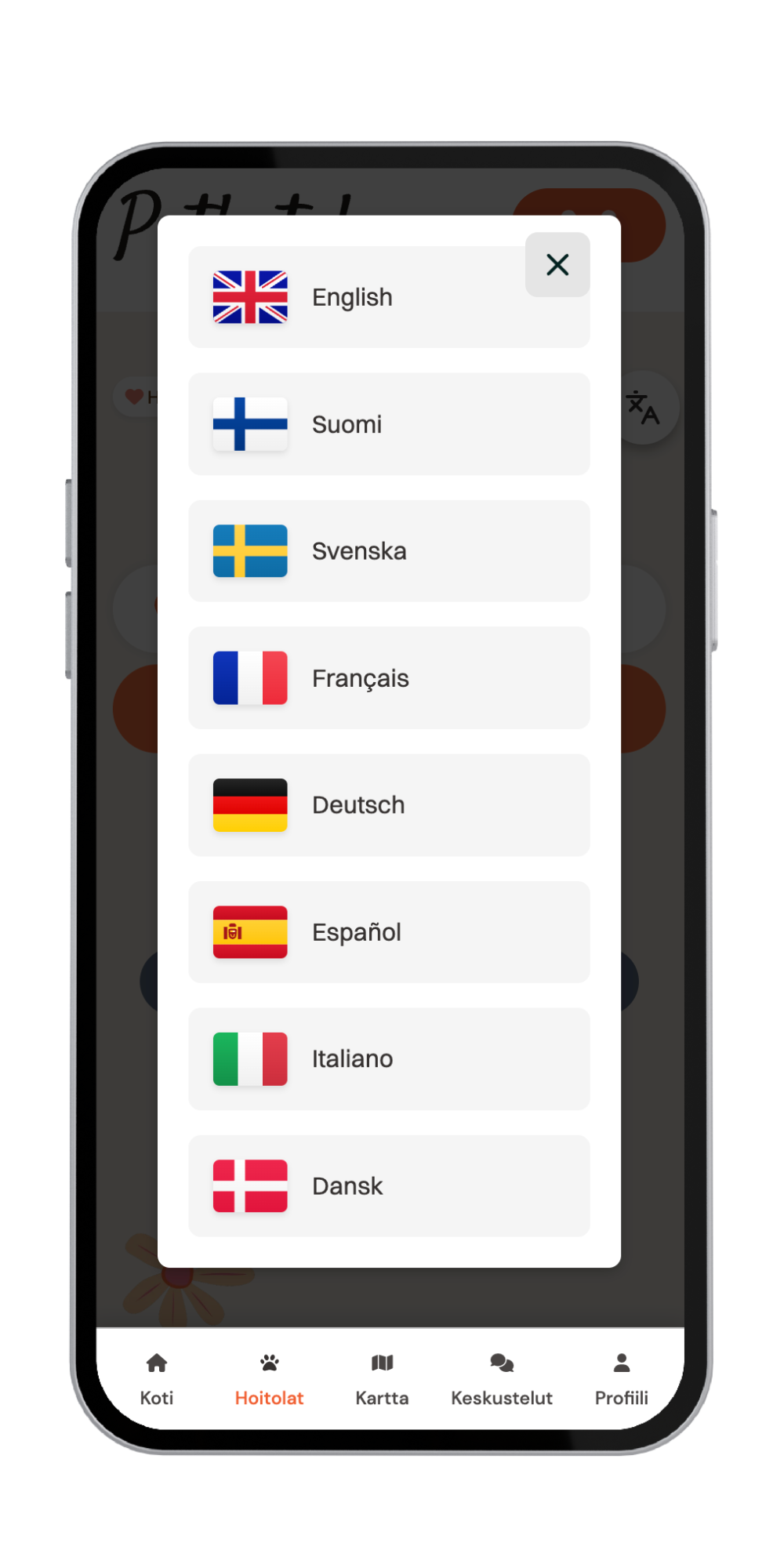

Overview

Pethotel.io started as a platform entirely in Finnish. While this served our initial user base, I became curious in my free time about making the site accessible to a broader audience. It was not an urgent requirement; it felt like a fun challenge to see how far I could push automation in localization using AI.

I had some experience with internationalization (i18n) in Ruby on Rails, but automating the entire process sounded like an interesting challenge. Initially, I thought about translating the site into Swedish and English, but then I figured, why not see how many languages I could support if automation made it easy?

Experimenting

I started by creating Rake tasks to automate the extraction and replacement of hardcoded text. Using Nokogiri, a Ruby gem for parsing HTML and XML, I wrote a script that could traverse all the .html.erb files in the application. This script identified text nodes that needed internationalization and replaced them with i18n keys.

Running this Rake task allowed me to edit nearly all the view files at once. It was exciting to see how much manual work I could bypass. Of course, there were challenges: edge cases where the script didn't work perfectly, especially with complex nested elements or dynamic content. I spent time refining the script to handle these exceptions, which was part of the fun.
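As a rough illustration of the extract-and-replace step, here is a minimal sketch. It uses a plain regular expression as a stand-in for Nokogiri so that it runs with only the Ruby standard library, and it only copes with simple markup. The method names (key_for, extract_hardcoded_text), the four-word key limit, and the use of Rails lazy lookup (a key starting with a dot) are assumptions for illustration, not the code that ran on Pethotel.io.

```ruby
# Build an i18n key from the first few words of the text.
# Assumption: this key format is illustrative only.
def key_for(text, max_words: 4)
  text.downcase.scan(/[[:alnum:]]+/).first(max_words).join("_")
end

# Returns [rewritten_template, { key => original_text }].
# Assumption: a regex stand-in for Nokogiri text-node traversal.
def extract_hardcoded_text(template)
  # Text that sits directly between a ">" and the next "<"
  text_between_tags = /(?<=>)[^<>]+(?=<)/
  found = {}

  rewritten = template.gsub(text_between_tags) do |raw|
    text = raw.strip
    next raw if text.empty? # leave whitespace-only nodes untouched

    key = key_for(text)
    found[key] = text
    # Keep surrounding whitespace, swap only the visible text
    raw.sub(text, "<%= t('.#{key}') %>")
  end

  [rewritten, found]
end

template = "<h1>Tervetuloa hotelliin</h1>\n<p><%= @user.name %></p>"
rewritten, found = extract_hardcoded_text(template)
puts rewritten
puts found.inspect
```

The returned hash is what would then be merged into the source locale file. Existing ERB output tags are left alone because they never sit between a closing and an opening angle bracket with text in between.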

Automating Translations

With the text extracted and replaced with i18n keys, the next step was generating translations. I started with Swedish and English but soon thought, if the automation works well, why not add more languages like French, German, and Spanish? It became an interesting experiment to see how far I could push it.

I created a service called ThinkingService that interacts with OpenAI's GPT models via their API. This allowed me to generate translations automatically using Large Language Models. Setting this up involved dealing with API rate limits and ensuring the translations made sense, which was a learning experience in itself.

The service processed translations asynchronously, so it didn't bog down the application. I played around with different parameters like model choice and temperature settings to see how they affected the translations. It was a lot of trial and error, but that's what made it interesting.
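The post does not say how the asynchronous processing was implemented. One possible shape, sketched here with plain Ruby threads and a queue, is a small worker pool. The method name translate_concurrently, the worker count, and the translator callable are all assumptions; in a Rails app a background job framework would be another reasonable choice.

```ruby
# Translate many strings concurrently with a small pool of worker threads.
# Assumption: `translator` is any callable standing in for the API call.
def translate_concurrently(strings, translator, workers: 4)
  queue = Queue.new
  strings.each_with_index { |text, index| queue << [index, text] }
  queue.close # once closed and drained, pop returns nil and workers stop

  results = Array.new(strings.size)
  threads = Array.new(workers) do
    Thread.new do
      while (item = queue.pop)
        index, text = item
        results[index] = translator.call(text)
      end
    end
  end

  threads.each(&:join)
  results # same order as the input strings
end
```

Keeping the worker count small is one simple way to stay under an API rate limit, since at most that many requests are in flight at once.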

Technical Details

The Rake task reads the YAML file for each locale and iterates over every translation key. For each string, it calls the translate_value method, which uses the ThinkingService to get the translated text from the AI model. The translated strings are then written back to the YAML file.

Here's a simplified version of the Rake task:

    require "yaml"

    namespace :translations do
      desc "Process translations in the YAML file"
      task process: :environment do
        # Load the YAML file
        file_path = "config/locales/fr.yml"
        @translations = YAML.load_file(file_path)

        # Configuration for translation
        config = {
          model: "gpt-4",
          max_tokens: 60,
          temperature: 0.0,
          from: "English",
          to: "French"
        }

        # Collect changes to be made
        changes = {}

        # Iterate over each translation
        # (process_translations is a helper that is not shown in this simplified version)
        @translations.each do |locale, keys|
          process_translations(locale, keys, config, changes)
        end

        # Apply collected changes (apply_changes is also not shown here)
        apply_changes(changes)

        # Write back to the YAML file
        File.write(file_path, @translations.to_yaml)
      end
    end
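The task above calls process_translations and apply_changes without showing them. The following is a hypothetical sketch of what such helpers might look like, not the original implementation: one walks the nested locale hash and records a translation for every string leaf, the other writes the results back. The names collect_changes and apply_collected_changes, and the translator callable, are assumptions.

```ruby
require "yaml"

# Visit every string leaf and record its translation under its key path.
# Assumption: `translator` takes (text, dotted_key_path) and returns a string.
# Non-string leaves (numbers, arrays, nil) are skipped in this sketch.
def collect_changes(node, translator, path = [], changes = {})
  case node
  when Hash
    node.each { |key, value| collect_changes(value, translator, path + [key], changes) }
  when String
    changes[path] = translator.call(node, path.join("."))
  end
  changes
end

# Write each collected translation back into the nested hash.
def apply_collected_changes(translations, changes)
  changes.each do |path, value|
    *parents, leaf = path
    parents.reduce(translations) { |hash, key| hash[key] }[leaf] = value
  end
  translations
end

translations = YAML.safe_load("fr:\n  home:\n    title: Welcome\n    max_pets: 3\n")
fake_translator = ->(text, _key_path) { "[fr] #{text}" }
changes = collect_changes(translations, fake_translator)
puts apply_collected_changes(translations, changes).to_yaml
```

Passing the dotted key path to the translator gives the model a hint about where the string is used, which matches the goal of providing context for each key.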

The ThinkingService uses HTTParty to make HTTP requests to the OpenAI API. It constructs prompts and handles responses, extracting the translated content. I had to implement error handling to make sure that if a translation failed, it wouldn't crash the entire process.

Here's a snippet of the ThinkingService:

    require "httparty"

    class ThinkingService
      include HTTParty
      base_uri "https://api.openai.com/v1"

      # Sends one chat completion request and returns the handled response
      def answer(selected_model, system_prompt, user_prompt, assistant_prompt, max_tokens, temperature)
        messages = [
          { role: "system", content: system_prompt },
          { role: "user", content: user_prompt },
          { role: "assistant", content: assistant_prompt }
        ]

        body = {
          model: selected_model,
          messages: messages,
          max_tokens: max_tokens,
          temperature: temperature
        }.to_json

        response = self.class.post("/chat/completions", body: body, headers: headers)

        # handle_response is not shown in this snippet
        handle_response(response)
      end

      private

      def headers
        { "Authorization" => "Bearer #{api_key}", "Content-Type" => "application/json" }
      end

      # The API key is read from the environment rather than hardcoded
      def api_key
        ENV["OPENAI_API_KEY"]
      end
    end
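The snippet leaves out handle_response. A minimal sketch of how the translated text might be pulled out of a chat completion response is shown below. The method name extract_translation and its (status, body) signature are assumptions chosen so the example runs without HTTParty; it returns nil on any failure so the caller can decide what to do instead of crashing.

```ruby
require "json"

# Hypothetical response handler: returns the translated text, or nil on failure.
# Assumption: `status` is the HTTP status code and `body` is the raw JSON string.
def extract_translation(status, body)
  return nil unless status == 200

  data = JSON.parse(body)
  return nil unless data.is_a?(Hash)

  # Chat completion responses carry the text at choices[0].message.content
  content = data.dig("choices", 0, "message", "content")
  return nil unless content.is_a?(String)

  stripped = content.strip
  stripped.empty? ? nil : stripped
rescue JSON::ParserError, TypeError
  nil # malformed or unexpectedly shaped body
end
```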

Implementing the code required attention to variables and placeholders within the strings. Placeholders like %{count} needed to be preserved in the translated text. I added logic to detect and protect these during translation.
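One way to protect placeholders, sketched here as an assumption rather than the exact logic used, is to swap each %{name} for an opaque numbered token before sending the text to the model and to restore it afterwards. The method names and the [[0]] token format are hypothetical.

```ruby
# Swap each %{name} placeholder for a numbered token before translation.
# Returns [masked_text, original_placeholders].
def mask_placeholders(text)
  names = []
  masked = text.gsub(/%\{\w+\}/) do |match|
    names << match
    "[[#{names.size - 1}]]"
  end
  [masked, names]
end

# Put the original placeholders back after translation.
# Unknown token numbers are left as they are.
def unmask_placeholders(text, names)
  text.gsub(/\[\[(\d+)\]\]/) do |token|
    names.fetch(Regexp.last_match(1).to_i, token)
  end
end

masked, names = mask_placeholders("You have %{count} new messages from %{name}")
puts masked
puts unmask_placeholders("Vous avez [[0]] nouveaux messages de [[1]]", names)
```

Because the tokens are numbered, the model is free to reorder them to fit the target language's grammar and each one still maps back to the right placeholder.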

Challenges Faced

Contextual Accuracy in Translations

Ensuring the AI-generated translations were contextually accurate was sometimes a challenge. Technical terms and idiomatic expressions didn't always translate well. I tweaked the prompts and adjusted parameters to improve the results, which was an interesting part of the experiment.
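To make the idea of tweaking prompts more concrete, here is a small sketch of how the prompts for one string could be assembled. The wording of the prompts, the method name build_prompts, and the use of the locale key path as a context hint are all assumptions for illustration.

```ruby
# Build the system and user prompts for one translation request.
# Assumption: the prompt wording and the key-path context hint are illustrative.
def build_prompts(text, key_path:, from:, to:)
  system_prompt = "You translate user interface text for a web application " \
                  "from #{from} to #{to}. Keep placeholders such as %{count} " \
                  "unchanged. Reply with the translation only."
  user_prompt = "Locale key: #{key_path}\nText: #{text}"
  { system: system_prompt, user: user_prompt }
end

prompts = build_prompts("Book a stay", key_path: "bookings.new.title",
                                       from: "English", to: "French")
puts prompts[:system]
puts prompts[:user]
```

A key path such as bookings.new.title tells the model that the string is a page title in a booking flow, which can help with short, ambiguous phrases.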

Handling Variables and Placeholders

Preserving variables and placeholders was crucial to maintain functionality. I had to implement checks to ensure the AI didn't alter these elements during translation. It added complexity but also made the project more engaging.
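A check of this kind can be as simple as comparing the set of placeholders before and after translation. The sketch below is one possible version; the method names and the decision to fall back to the source text are assumptions.

```ruby
# Sorted list of %{name} placeholders found in a string.
def placeholders_in(text)
  text.scan(/%\{\w+\}/).sort
end

# True when the translation kept exactly the same placeholders as the source.
def placeholders_preserved?(source, translation)
  placeholders_in(source) == placeholders_in(translation)
end

# Fall back to the source text when the translation damaged a placeholder.
def safe_translation(source, translation)
  placeholders_preserved?(source, translation) ? translation : source
end
```

Sorting before comparing means a translation that reorders placeholders still passes, while one that renames or drops a placeholder is rejected.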

Cost Concerns Turned Out Minimal

Using the OpenAI API comes with rate limits and costs, and processing many translations could get expensive. In practice my prompts were short and I had max tokens set to somewhere around 100-200, so the cost for the whole thing was very low.

Error Handling and Edge Cases

There were times when the AI returned incomplete responses. Implementing robust error handling was necessary to keep the process running smoothly. Handling these edge cases was part of the learning experience.
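One common way to deal with incomplete or failed responses is to retry a few times with a growing delay and give up gracefully. The sketch below illustrates that pattern; the method name with_retries, the attempt count, and the delays are assumptions, not the values used in the project.

```ruby
# Retry a block a few times with exponential backoff.
# Returns the first non-blank string result, or nil if every attempt fails.
def with_retries(attempts: 3, base_delay: 0.5)
  attempts.times do |attempt|
    begin
      result = yield
      return result unless result.nil? || result.strip.empty?
    rescue StandardError => e
      warn "Attempt #{attempt + 1} failed: #{e.message}"
    end
    # Wait longer after each failure, but not after the last attempt
    sleep(base_delay * (2**attempt)) if attempt < attempts - 1
  end
  nil
end
```

Returning nil instead of raising lets the calling task skip a single key and carry on with the rest of the locale file.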

Results

Through this experiment, I managed to generate translations for multiple languages relatively quickly. Once the code was working and tested, the full run took around 3-4 hours. What started as a curiosity turned into a functional multilingual platform with minimal manual effort. It was satisfying to see the platform become more accessible to a wider audience.

The automated system saved a lot of time and maintained consistency across the application. It also simplified maintenance, allowing for easy updates as the platform evolved. While there were challenges, the end result was rewarding.

Learnings

Let AI write your regex code; it will figure it out much faster and more precisely

Specify the detection logic and let AI figure out the regex for it.

I realized the power of code that writes itself

I knew about the file-editing capabilities of programming languages but never really had a use for them. This project made me realize how powerful they can be. It will surely change how I think about everything in the future.

AI has opened up more possibilities for automatic file editing

AI can be used to generate translations, write code, and even refactor code. It's exciting to think about the possibilities it opens up for automation in web development.

Version control helped me track what my automation changed each time it ran

Version control was crucial in tracking changes and checking what was edited each time the automation ran. It helped me understand the impact of the changes and revert things if needed.

Looking back, I really enjoyed this experiment. At first I thought it wouldn't be possible, but then something in me asked "why not?" and I started working. It was a fun project that ended up being a small but meaningful enhancement for Pethotel.io.